TRUST-AI, a project working on reliable artificial intelligence

Artificial intelligence techniques increasingly influence decision-making, but their reasoning proceeds through steps that are too abstract or too complex for users to follow. This makes it difficult for people to trust AI fully, so the TRUST-AI project aims to make AI's choices more transparent and fair. The European Innovation Council has just awarded funding to this programme, which includes members of the joint project team run with Inria TAU at the Laboratory for Computer Science (LRI - CNRS/Paris-Saclay University).

Artificial intelligence, and particularly deep learning, is producing ever more impressive results, but it does so through processes that are often difficult for human reasoning to follow. People talk of "black boxes" that find excellent solutions without being able to explain how they reach them. This raises ethical questions at a time when AI is increasingly guiding choices. For example, if a diagnostic support algorithm influences a medical decision, patients and doctors have a right to know how the AI came to its conclusion. These choices may also favour certain people over others, which raises fairness issues.

In this context, the European Innovation Council (EIC) has provided financial support to TRUST-AI¹ in the framework of the new Horizon Europe programme. This project aims to develop AI solutions that are more transparent, fair and explainable, which in turn makes them more acceptable. If users are to trust these black boxes, it is essential to understand more about what happens inside them and to ensure they produce unbiased results.

The TRUST-AI consortium is coordinated by Gonçalo Figueira from the Institute for Systems and Computer Engineering, Technology and Science (INESC TEC, Portugal). It includes members of the University of Tartu (Estonia), the Dutch Research Council (NWO, Netherlands) and the National Institute for Research in Computer Science and Control (Inria, France), along with the companies APINTECH (Cyprus), LTPlabs (Portugal) and Tazi (Turkey). The programme began last October and has a budget of around four million euros for a four-year period.

- ¹ TRUST-AI: Transparent, reliable and unbiased smart tool for AI

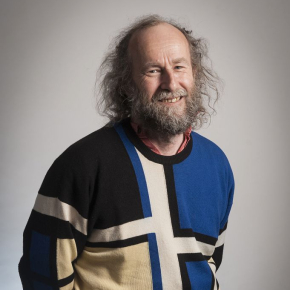

"The aim of TRUST-AI is to enable users to engage in a dialogue with learning algorithms in order to guide the creation of models", explains Marc Schœnauer, Inria research professor at the Laboratory for Computer Science (LRI - CNRS/Paris-Saclay University). "To achieve that, we will introduce human factors as early as possible in the learning loop."

The researchers have opted for an approach that combines user-interface improvements, cognitive science and genetic programming. The last of these is among Marc Schœnauer's research themes at the LRI. It is inspired by natural selection and is applied to trees in which each branch represents a possible line of reasoning; the trees are optimised to obtain the most efficient complete path. This technique makes it possible to track all of the algorithm's choices and thus to present and explain them more easily. However, genetic programming does not work well on the large data sets used with deep learning methods. The idea is therefore to use a dual approach: deep neural networks chosen for their performance, and genetic programming for its explainability.
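As a rough illustration of the genetic programming idea described above, the sketch below evolves small expression trees to fit a handful of data points. It is not the TRUST-AI implementation: the operator set, the terminals, the target formula `x*x + x`, the helper names and every parameter value are assumptions chosen only to keep the example short. What it shows is why the result is easy to inspect: the best individual is a readable formula rather than a set of network weights.

```python
import operator
import random

# Hypothetical primitive set: binary operators plus terminals (variable x, constants).
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}
TERMINALS = ["x", 1.0, 2.0]

def random_tree(rng, depth):
    """Grow a random expression tree as nested tuples (op, left, right)."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(TERMINALS)
    op = rng.choice(list(OPS))
    return (op, random_tree(rng, depth - 1), random_tree(rng, depth - 1))

def evaluate(tree, x):
    """Compute the value of the expression tree for a given x."""
    if isinstance(tree, tuple):
        op, left, right = tree
        return OPS[op](evaluate(left, x), evaluate(right, x))
    return x if tree == "x" else tree  # variable or numeric constant

def to_string(tree):
    """Render the tree as a human-readable formula."""
    if isinstance(tree, tuple):
        op, left, right = tree
        return f"({to_string(left)} {op} {to_string(right)})"
    return str(tree)

def size(tree):
    """Count the nodes of the tree (used to limit uncontrolled growth)."""
    if isinstance(tree, tuple):
        return 1 + size(tree[1]) + size(tree[2])
    return 1

def error(tree, samples):
    """Sum of squared errors of the tree over (x, y) samples."""
    return sum((evaluate(tree, x) - y) ** 2 for x, y in samples)

def random_subtree(rng, tree):
    """Walk down random branches and return the subtree reached."""
    while isinstance(tree, tuple) and rng.random() < 0.7:
        tree = rng.choice(tree[1:])
    return tree

def replace_random(rng, tree, new):
    """Return a copy of tree with one randomly chosen subtree replaced by new."""
    if not isinstance(tree, tuple) or rng.random() < 0.3:
        return new
    op, left, right = tree
    if rng.random() < 0.5:
        return (op, replace_random(rng, left, new), right)
    return (op, left, replace_random(rng, right, new))

def evolve(samples, seed=0, pop_size=60, generations=30, max_size=40):
    """Evolve a population of trees and return the best one found."""
    rng = random.Random(seed)
    population = [random_tree(rng, 3) for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the best third unchanged (elitism).
        population.sort(key=lambda t: error(t, samples))
        survivors = population[: pop_size // 3]
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.choice(survivors), rng.choice(survivors)
            # Crossover: graft a subtree of b somewhere into a.
            child = replace_random(rng, a, random_subtree(rng, b))
            # Mutation: occasionally replace a subtree with a fresh random one.
            if rng.random() < 0.3:
                child = replace_random(rng, child, random_tree(rng, 2))
            # Oversized offspring are discarded in favour of the parent.
            children.append(child if size(child) <= max_size else a)
        population = survivors + children
    return min(population, key=lambda t: error(t, samples))

# Assumed toy target for illustration: y = x*x + x on five points.
samples = [(x, x * x + x) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
best = evolve(samples)
print(to_string(best), "error:", error(best, samples))
```

The printed formula can be read and checked by a person directly, which is the property the article attributes to genetic programming. The sketch says nothing about how the project couples this with deep neural networks.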

TRUST-AI is intended to be of very general use, but three use cases have been selected. The first is the diagnosis of rare tumours, in which algorithms help doctors choose the best time for surgery according to the tumours' development. This is a crucial decision that cannot be taken without understanding the reasoning behind it.

Applications are also possible in the management of stocks of goods, particularly fresh produce, to help optimise deliveries and anticipate possible delays. In this area, the absence of bias is the main issue because customers want to be sure that the algorithm will not favour a competitor. The final use case is the prediction of energy consumption, to optimise the activity of power stations and influence consumers by modulating prices. Here, citizens will want to know how new prices are decided. This is the core aim of TRUST-AI: to provide all the elements needed for trustworthy AI.